Based on the Intel Movidius Myriad X VPU and supported by the Intel Distribution of OpenVINO toolkit, the Intel NCS 2 delivers greater performance than the previous generation.

Intel Movidius Neural Compute Stick price.

Global providers: Amazon, Mouser Electronics.

This episode of the IoT Developer Show features two demos that showcase the Movidius Neural Compute Stick.

Intel Corporation introduced the Intel Neural Compute Stick 2 in November 2018.

The Neural Compute Stick supports OpenVINO, a toolkit that accelerates solution development and streamlines deployment.
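As a rough illustration of how an application might target the stick through OpenVINO, the sketch below picks an inference device and compiles a model for it. It assumes an OpenVINO release that still ships the `MYRIAD` device plugin (the device name OpenVINO uses for the Neural Compute Stick 2) and uses the `openvino.runtime.Core` Python API. The helper names `pick_device` and `load_on_stick` and the `model.xml` path are hypothetical, introduced only for this example.

```python
def pick_device(available, preferred="MYRIAD", fallback="CPU"):
    """Return `preferred` if it appears among the available devices, else `fallback`.

    Device entries may carry a suffix (for example when several sticks are
    plugged in), so a prefix match on "MYRIAD." is accepted as well.
    """
    for name in available:
        if name == preferred or name.startswith(preferred + "."):
            return preferred
    return fallback


def load_on_stick(model_path):
    """Compile a model for the stick if present, otherwise for the CPU.

    Hypothetical helper: OpenVINO is imported lazily so that pick_device
    can be used and tested on machines without the toolkit installed.
    """
    from openvino.runtime import Core

    core = Core()
    device = pick_device(core.available_devices)  # list of device name strings
    model = core.read_model(model_path)           # e.g. an IR .xml or an .onnx file
    return core.compile_model(model, device)
```

With a stick attached and a converted model on disk, `load_on_stick("model.xml")` would return a compiled model ready for inference; without a stick, the same call falls back to the CPU plugin.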

Speed prototyping for your deep neural network application with the new Intel Neural Compute Stick 2 (NCS 2).

The processor is the Intel Movidius Myriad X Vision Processing Unit (VPU).

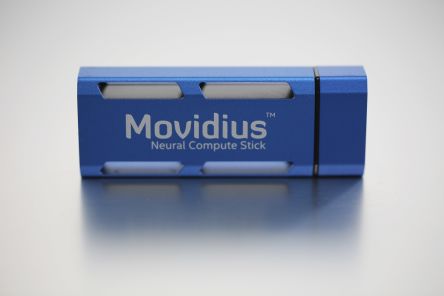

The Movidius Neural Compute Stick is a miniature deep learning hardware development platform that you can use to prototype, tune, and validate your AI programs, specifically deep neural networks.

RS Components (UK), JD (China), Akizuki Denshi.

It features the same Movidius vision processing unit (VPU) used to bring machine intelligence to drones, surveillance cameras, and VR or AR headsets.

Designed for building smarter AI algorithms and prototyping computer vision at the network edge, the Intel Neural Compute Stick 2 enables deep neural network testing, tuning, and prototyping, so developers can go from prototyping into production.

Dimensions: 72.5 mm x 27 mm x 14 mm.

Operating temperature: 0° C to 40° C.

Check out the Intel Movidius Neural Compute app.

Amazon, Mouser Electronics, CDW, Arrow.

The Intel Neural Compute Stick 2 is powered by the Intel Movidius Myriad X VPU to deliver industry-leading performance, wattage, and power.

The launch took place on Nov. 14, 2018, at Intel AI DevCon in Beijing.

Intel Movidius VPUs run demanding computer vision and edge AI workloads efficiently.

Purchase your Intel Neural Compute Stick 2 from one of our trusted partners.

The Neural Compute Engine, in conjunction with the 16 powerful SHAVE cores and a high-throughput intelligent memory fabric, makes Movidius Myriad X ideal for on-device deep neural networks.

Supported frameworks: TensorFlow, Caffe, Apache MXNet, Open Neural Network Exchange (ONNX), and PyTorch and PaddlePaddle via an ONNX conversion.

By coupling highly parallel programmable compute with workload-specific hardware acceleration in a unique architecture that minimizes data movement, Movidius VPUs achieve a balance of power efficiency and compute performance.
